When we think about electricity the first name to come to mind is famed American inventor Thomas Edison, who filed the first of his more than 1,000 patents at the age of 21. In 1879, at the age of 32, Edison invented what today we call the light bulb. It was a tremendous achievement; the gas lamps that electric bulbs eventually replaced left long black streaks up the sides of buildings, emitted toxic fumes, and frequently caused fires and explosions which destroyed homes and entire city blocks.

There was only one problem with the electric light bulb: practically no one had electricity. Or, to think about it differently, an excellent application was developed, but there was no bandwidth to enable it. The electric light bulb without electricity was like Facebook without the Internet: a great idea, but practically useless.

The following year Edison set out to develop an electric grid to supply homes and businesses with electricity so that they would purchase his light bulbs and other appliances. In 1882 Pearl Street Station in New York City became the world’s first central power plant to distribute electricity with direct current. In just two years Pearl Street Station was serving 508 customers with 10,164 electric lamps.

Interior of Pearl Street Station, circa 1883

Pervasive, persistent electricity would shape and revolutionize the 20th century. Electrified assembly lines made the Model T affordable. Efficient manufacturing led to growth in accounting, marketing, human resources, and, hence, the American middle class. By the middle of the century this new American middle class spent much of its extra money on electric appliances (all of which could, crucially, plug into standard outlets) that freed American housewives from washing dishes, scrubbing clothes, and heating up irons (which, yes, used to be made of iron). The electric revolution – like the industrial revolution before it – led to a cognitive surplus, which we invested in the television sitcom, another direct result of distributed electricity.

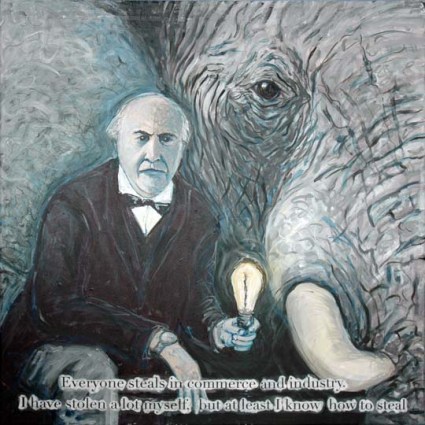

The Sacrificial Elephant

But it was not Edison’s direct current distribution of electricity that catalyzed so much social change. As Josh Clark and Chuck Bryant point out in an entertaining podcast, Thomas Edison was a brilliant inventor, but an even more brilliant self-promoter. Nikola Tesla, on the other hand, was at least as brilliant, but one of the lousiest self-promoters in the long, impressive history of self-promotion.

The problem with direct current is that it is a terribly inefficient way to transmit electricity over long distances. In order to create a national electric grid based on direct current, we would need central power plants in just about every neighborhood. Electricity would need to be produced locally. Tesla’s invention, alternating current, allowed electricity to travel across great distances without much loss in the process. American businessman George Westinghouse immediately understood the significance of Tesla’s discovery: electricity could be produced centrally far more cheaply and then distributed all over the country via the wide network of power lines that today we take for granted as we drive down every major highway in the world.
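A rough back-of-the-envelope sketch shows why voltage matters over long distances. The heat lost in a power line is the current squared times the line's resistance, and for a fixed amount of power the current falls as the voltage rises. Alternating current made it practical to step the voltage up with transformers for the long haul and back down again for homes. The numbers below (100 kW of power, a line with 0.5 ohms of resistance, and the two voltages) are illustrative assumptions rather than historical measurements, and the function name is made up for this example.

```python
def line_loss_fraction(power_watts: float, volts: float, line_ohms: float) -> float:
    """Fraction of the power sent that is lost as heat in the line.

    Simple resistive model only: it ignores everything except I^2 * R loss.
    """
    current = power_watts / volts          # I = P / V
    loss_watts = current ** 2 * line_ohms  # P_loss = I^2 * R
    return loss_watts / power_watts


POWER = 100_000.0  # 100 kW sent down the line (assumed figure)
LINE_OHMS = 0.5    # total resistance of the line (assumed figure)

low = line_loss_fraction(POWER, 1_000.0, LINE_OHMS)    # low-voltage transmission
high = line_loss_fraction(POWER, 10_000.0, LINE_OHMS)  # ten times the voltage

print(f"loss at 1,000 V:  {low:.2%}")
print(f"loss at 10,000 V: {high:.2%}")
```

In this model, raising the voltage tenfold cuts the loss a hundredfold, because the loss scales with the inverse square of the voltage.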

“Edison and Topsy”, Acrylic on Canvas, 2006

The War of Currents, which led most horrifically to the electrocution of Topsy the elephant, raged on until 1893 when Westinghouse won the contract to build the hydroelectric generators at Niagara Falls, which used alternating current and bore Nikola Tesla’s name. The rest is history. From Wikipedia: “AC replaced DC for central station power generation and power distribution, enormously extending the range and improving the safety and efficiency of power distribution.”

Toward the World Wide Computer

For Nicholas Carr – best known for his Atlantic article “Is Google Making Us Stupid?” – the triumph of alternating current over direct current, of centralization over decentralization, is the historic parallel of what is happening in today’s software industry. The centralization of software, computing, and storage was made especially evident last week when Google announced that it will be releasing a lightweight operating system to connect affordable netbooks to the company’s popular online applications including Gmail, Google Calendar, Picasa, Reader, and Documents.

With undisguised regret, Carr says that the centralization of software from applications installed on individual computers to applications installed on massive data centers is inevitable. Let’s take a feed reader application as an example. The most popular desktop feed reader application for Apple computers is NewsFire, which stores all of your data on your own computer. Every time NewsFire is updated all of its users must download a new copy of the application. If something happens to your hard drive, then all of your data is erased. Furthermore, if you want to share information from your feed reader – either a single article or an entire folder of feeds – then you must send that data via email to each individual recipient. NewsFire is the direct current of feed readers.

Photo of inside a data center. Taken from a blog post on cloud computing by Google software engineer Mark Chu-Carroll.

Google Reader is the feed reading equivalent of alternating current. Unlike NewsFire, there are not millions of copies of Google Reader installed on individual computers. Rather, Google Reader is a single application with millions of users all over the world. The computing power required by the application and the storage of data all take place at Google’s massive data centers. When a new feature is added to Google Reader you don’t need to download the latest version of the application. Rather, it loads automatically in your browser. The data is stored across millions of hard drives all around the world, so even if one hard drive fails, your data is still safe. If I want to share my recent favorite articles or even my entire folder of Liberian bloggers, all I have to do is select the option.
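The safety claim rests on replication: each piece of data is copied to several drives, so it is lost only if every copy fails. Here is a minimal sketch, assuming that drive failures are independent of one another and that every drive has the same chance of failing in a given period. Both are simplifications, and the 5% failure chance and the three-copy count are illustrative figures, not Google's actual numbers.

```python
def loss_probability(drive_failure_prob: float, copies: int) -> float:
    """Chance that every copy of a piece of data is lost.

    Assumes each copy sits on a different drive and drives fail independently.
    """
    return drive_failure_prob ** copies


single = loss_probability(0.05, 1)  # one copy, as on your own hard drive
triple = loss_probability(0.05, 3)  # three copies on three separate drives

print(f"one copy:     {single:.4%} chance of losing the data")
print(f"three copies: {triple:.4%} chance of losing the data")
```

Under these assumptions each extra copy multiplies the chance of loss by the failure probability of a single drive, which is why a data center can tolerate individual drives dying all the time.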

Desktop applications just can’t compete with the efficiency of server-based applications, especially when they are data intensive and frequently updated, two characteristics that increasingly define the software we depend on, from Wikipedia to Google Maps. Slowly but surely software development is moving from desktop applications that exist on each individual computer to massive data centers, often called “the cloud”, or in Carr’s words, “the worldwide computing grid” and the “World Wide Computer”.

From Making Content to Making Sense of It

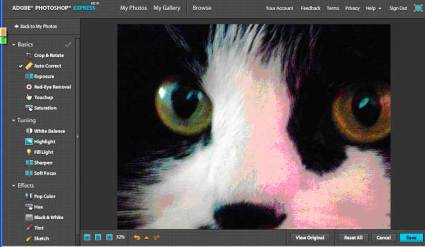

Every major software company is already in the process of moving its applications and data from the desktop to the cloud. Google’s forthcoming operating system is the most explicit example, but Apple’s MobileMe program and Microsoft’s Midori project have the same general purpose: to move data from the desktop to the cloud, where it is accessible from multiple devices (mobile phone, laptop, desktop, television) and stored on massive and massively cheap hard drives. There is already an online version of Photoshop, and Adobe plans on making similar versions available for its entire creative suite of applications. Soon you will be able to produce professional quality photos, audio, and video using applications that don’t exist on your computer.

Adobe’s free online version of Photoshop

But cloud computing excels not in producing content but in aggregating, sorting, filtering, and giving meaning to content that has already been produced. When the personal computer was invented we all wanted to create digital versions of our analog archives. We transcribed handwritten journals to text documents, scanned in shoebox upon shoebox of photographs, and digitized video from VHS tapes. Five years later, many of us had practically forgotten how to write with a pen. We snapped 100 photographs a day with our digital cameras, and filled up entire terabyte hard drives with HD digital video from summer vacations. All of a sudden our need wasn’t to produce content but to share it and make sense of it.

The Melting Away of the Global Ice Industry

What is the societal impact of transitioning from a world wide web of networked computers to the World Wide Computer? This is the fundamental question that Isaac and I will examine with an all-star cast of speakers at this year’s Ars Electronica Symposium. A few obvious effects are frequently discussed. For one thing, software gets a lot more social, encouraging interaction among users, the sharing of data, and sometimes even collaborative creation of the software itself. It also gets a hell of a lot cheaper. So cheap, in fact, that Chris Anderson makes the controversial argument that free is the future of business. Indeed, when was the last time you paid for anything online?

Of course there are winners and losers in every major chapter of technological change. As Carr notes, “while electrification propelled some industries to rapid growth, it wiped out others entirely,” most notably the once-mammoth global industry of delivering ice to preserve food. The World Wide Computer and its various applications – Twitter, Wikis, Blogspot, WordPress, and Google Search – make it so easy for ordinary citizens to publish and find news, information, and analysis that traditional journalism isn’t able to charge for what is essentially the same product: relevant information. My own opinion is, “who needs a global ice industry when you have refrigerators?” But for Carr the loss of traditional journalism is tragic. In fact, Carr considers most of the effects of the cloud to be negative: our attention span shrinks, we become more dependent on technology, we don’t control our own data, our privacy is at risk, we help corporations make profits by producing free content for them, we become more isolated and less satisfied.

Carr is far from the only pundit to focus on the negative aspects of the cloud. Last year Benjamin Mako Hill and fellow open source advocates published the Franklin Street Statement on Freedom and Network Services, which argues that cloud computing takes power away from users and gives it to the for-profit corporations that host their data. Furthermore, if you thought it was difficult to migrate your data from Microsoft Outlook to Thunderbird, just try moving it from Flickr to Expono or from YouTube to Blip. Once you surrender your data to the cloud, you tend to be locked in to a particular company. Also, when you store your data on your own computer it is up to you to back it up. But when you store it in the cloud a massive data loss could mean the end of your data and that of thousands of others, as happened recently to Ma.gnolia users. Finally, just as alternating current depends on increased voltage to travel over long distances, server-based software depends on fast internet connections to deliver the data from server farms to cyber-cafes in Ghana. The problem is that cyber-cafes in Ghana don’t yet have fast enough internet connections to support online applications like Picnik and JayCut. So even though cloud computing has brought the cost of powerful applications down to zero, limited bandwidth still restricts their usage to broadband subscribers in the developed world.

Literary Genre: Decentralization Nostalgia

My one complaint with the book is that Carr, while lambasting our current age of distraction, often gets distracted himself, drifting from the book’s theme of centralized computing into loquacious dystopian rants about technology in general. Quoting the playwright Richard Foreman, Carr writes: “I come from a tradition of Western culture in which the ideal (my ideal) was the complex, dense, and ‘cathedral-like’ structure of the highly educated and articulate personality – a man or woman who carried inside themselves a personally constructed and unique version of the entire heritage of the West. I see within us all (myself included) the replacement of complex inner density with a new kind of self – evolving under the pressure of information overload and the technology of the ‘instantly available.’” Foreman concludes that we are turning into “pancake people – spread wide and thin as we connect with that vast network of information accessed by the mere touch of a button.”

(This idea of “pancake people” will most definitely form the basis of Carr’s forthcoming book “The Shallows: Mind, Memory, and Media in an Age of Instant Information.” Futurist Jamais Cascio responded to Carr’s essay “Is Google Making Us Stupid?” in the current issue of The Atlantic.)

The Big Switch is categorized as a “Science/Technology” book, but in my personal library I would put it on the “Nostalgia” bookshelf along with Michael Pollan’s The Omnivore’s Dilemma. Both Carr and Pollan are aware of the impressive efficiencies of centralized agriculture and centralized computing, but they are also both nostalgic about our decentralized past when both food and information were produced, sold, and consumed locally.

I sympathize with both authors, and I do believe that the tension between centralization and decentralization will be a major feature of this century. But until I see a large percentage of Internet users close down their Gmail accounts in favor of applications produced by local developers, or give up the grocery store for the farmers market, I would place my bet on continued centralization for the time being.

Oso, great article you wrote. You have a great way of distilling the technological information and ideas into plain English for the non-tech person like me. Your article made me understand what the fuss and importance is of cloud computing. My concern with cloud computing is privacy. But, I’m sure somebody will come along and solve that problem. After all, nothing is really private in this day and age, all of your info can be found on Zabasearch. Saludos! tacosam

BTW, did you see the Nova special on PBS about CAPTCHA and reCAPTCHA? Pretty neat stuff.

Wow. I don’t know how you put out so much eloquently processed text. My main future nostalgia is about what will be left of all our electronically memorialized record should a big electromagnetic storm force the planet back to analog. Always the optimist. -M.